Another day, another lawsuit. Apple is being sued for failing to curtail child sexual abuse material on iCloud, reports The New York Times (a subscription is required to read the article).

Part of the US$1.2 billion lawsuit involves Apple’s decision to abandon plans to scan iCloud photos for child sexual abuse material (CSAM). Filed in Northern California on Saturday, the lawsuit represents a potential group of 2,680 victims and alleges that Apple’s failure to implement previously announced child safety tools has allowed harmful content to keep circulating, causing ongoing harm to victims.

In September 2021, Apple delayed its controversial CSAM detection system and child safety features. In a statement at the time, Apple said: “Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

About the CSAM plan

On August 6, 2021, Apple previewed new child safety features for its various devices that were due later that year. The announcement stirred up controversy. Here’s the gist of the plan as Apple described it:

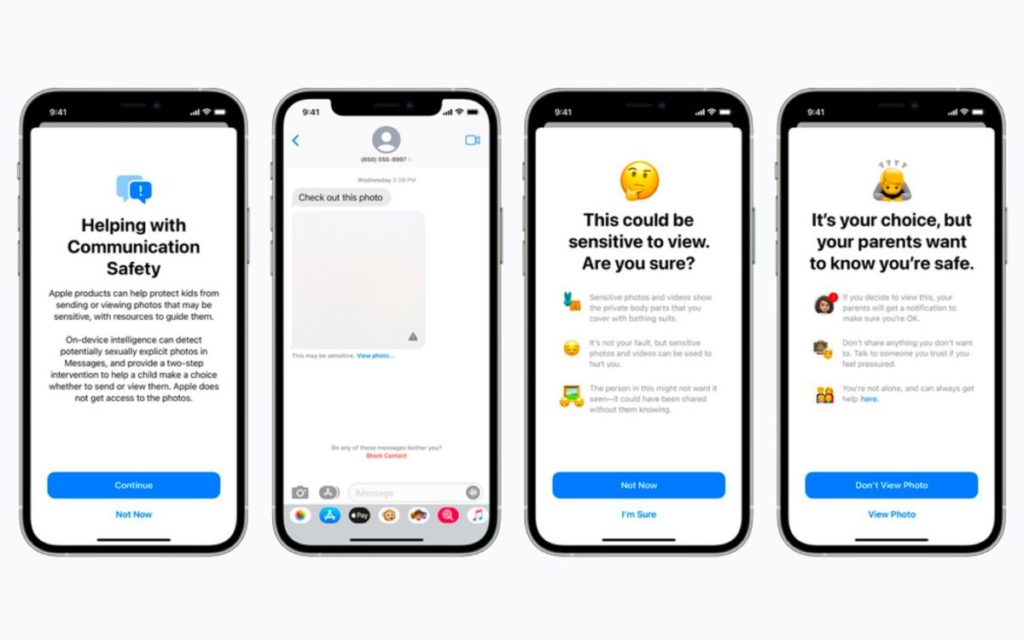

Apple is introducing new child safety features in three areas, developed in collaboration with child safety experts. First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

Next, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy. CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos.

Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

The controversy

The announcement was met with major controversy. For example, Fight for the Future, a nonprofit advocacy group for digital rights, launched nospyphone.com, a web page designed to give individuals a way to contact Apple directly and oppose its child protection plan, which involved scanning devices for child abuse images.

Apple employees also spoke out internally about the plans, Reuters reported. An August 13, 2021 article said employees of the tech giant had flooded an internal Apple Slack channel with more than 800 messages about the plan announced a week earlier. Many expressed worries that the feature could be exploited by repressive governments looking to find other material for censorship or arrests.

Article provided with permission from AppleWorld.Today