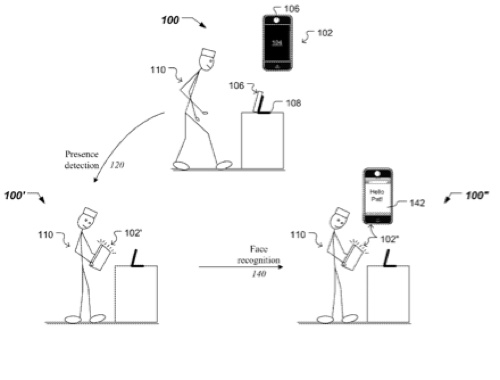

Future OS X and iOS devices may recognize your face when you look at them, if an Apple patent (number 20110317872) for “low threshold face recognition” comes to fruition.

The filing discloses methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for reducing the impact of lighting conditions and biometric distortions while providing a low-computation solution for reasonably effective (low threshold) face recognition. In one aspect, the methods include processing a captured image of a face of a user seeking to access a resource by conforming a subset of the captured face image to a reference model.

The reference model corresponds to a high information portion of human faces. The methods further include comparing the processed captured image to at least one target profile corresponding to a user associated with the resource, and selectively recognizing the user seeking access to the resource based on a result of said comparing. Robert Mikio Free is the inventor.

Here’s Apple’s background and summary of the invention: “This specification relates to low threshold face recognition, e.g., a face recognition system that can tolerate a certain level of false positives in making face recognition determinations. Most face recognition systems fall into one of two categories. A first category system tends to be robust and can tackle various lighting conditions, orientations, scale and the like, and tends to be computationally expensive.

“A second category system is specialized for security-type applications and can work under controlled lighting conditions. Adopting the first category systems for face recognition on consumer operated portable appliances that are equipped with a camera would unnecessarily use an appliance’s computing resources and drain its power. Moreover, as the consumer portable appliances tend to be used both indoor and outdoor, the second category systems for face recognition may be ineffective.

“Such ineffectiveness may be further exacerbated by the proximity of the user to the camera, i.e., small changes in distance to and tilt of the appliance’s camera dramatically distort features, making traditional biometrics used in security-type face recognition ineffective.

“This specification describes technologies relating to reducing the impact of lighting conditions and biometric distortions, while providing a low-computation solution for reasonably effective (low threshold) face recognition that can be implemented on camera-equipped consumer portable appliances.

“In general, one aspect of the subject matter described in this specification can be implemented in methods performed by an image processor that include the actions of processing a captured image of a face of a user seeking to access a resource by conforming a subset of the captured face image to a reference model. The reference model corresponds to a high information portion of human faces.

“The methods further include comparing the processed captured image to at least one target profile corresponding to a user associated with the resource, and selectively recognizing the user seeking access to the resource based on a result of said comparing.

“These and other implementations can include one or more of the following features. In some cases, the high information portion includes eyes and a mouth. In some other cases, the high information portion further includes a tip of a nose. Processing the captured image can include detecting a face within the captured image by identifying the eyes in an upper one third of the captured image and the mouth in the lower third of the captured image.
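
To make the "upper third / lower third" rule concrete, here is a minimal Python sketch. It is an illustration only, not code from the patent: the function name and the assumption that eye and mouth positions are already available as pixel rows (counted from the top of the image) are hypothetical.

```python
def plausible_face_layout(image_height, left_eye_y, right_eye_y, mouth_y):
    """Return True if both eyes fall in the upper third of the image
    and the mouth falls in the lower third.

    Rows are counted from the top of the image (y = 0 is the top row).
    """
    upper_limit = image_height / 3        # bottom edge of the upper third
    lower_limit = 2 * image_height / 3    # top edge of the lower third
    eyes_ok = left_eye_y < upper_limit and right_eye_y < upper_limit
    mouth_ok = mouth_y >= lower_limit
    return eyes_ok and mouth_ok
```

For a 300-pixel-tall image, eyes near row 60 and a mouth near row 240 would pass this check, while an eye at row 150 would not.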

“The reference model includes a reference image of a face, and processing the captured image further can include matching the eyes of the detected face with eyes of the face in the reference image to obtain a normalized image of the detected face. Additionally, processing the captured image can further include vertically scaling a distance between an eyes-line and the mouth of the detected face to equal a corresponding distance for the face in the reference image in order to obtain the normalized image of the detected face. In addition, processing the captured image can further include matching the mouth of the detected face to the mouth of the face in the reference image in order to obtain the normalized image of the detected face.
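
The vertical scaling step described above can be sketched roughly as follows. This is an assumption-laden illustration rather than the patent's implementation: the helper names are hypothetical, and the sketch handles only the vertical coordinate, anchoring the eyes-line of the detected face to the eyes-line of the reference image.

```python
def vertical_scale(detected_eye_y, detected_mouth_y, ref_eye_y, ref_mouth_y):
    """Scale factor that makes the detected eyes-to-mouth distance
    equal to the corresponding distance in the reference image."""
    detected_distance = detected_mouth_y - detected_eye_y
    if detected_distance <= 0:
        raise ValueError("mouth must lie below the eyes-line")
    return (ref_mouth_y - ref_eye_y) / detected_distance


def normalize_y(y, detected_eye_y, ref_eye_y, scale):
    """Map a row of the detected face into reference coordinates,
    keeping the eyes-line fixed on the reference eyes-line."""
    return ref_eye_y + (y - detected_eye_y) * scale


# Example: eyes at row 40 and mouth at row 100 in the captured image,
# eyes at row 50 and mouth at row 140 in the reference image.
scale = vertical_scale(40, 100, 50, 140)
mouth_in_reference = normalize_y(100, 40, 50, scale)
```

With these numbers the detected mouth lands exactly on the reference mouth row, which is the point of the normalization.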

“In some implementations, comparing the processed captured image can include obtaining a difference image of the detected face by subtracting the normalized image of the detected face from a normalized image of a target face associated with a target profile. Comparing can further include calculating scores of respective pixels of the difference image based on a weight defined according to proximity of the respective pixels to high information portions of the human faces.

“The weight decreases with a distance from the high information portions of the human faces. For example, the weight decreases continuously with the distance from the high information portions of the human faces. As another example, the weight decreases discretely with the distance from the high information portions of the human faces. As yet another example, the weight decreases from a maximum weight value at a mouth-level to a minimum value at an eyes-line.
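
As a rough illustration of the weighted difference idea, the Python sketch below scores two small grayscale images. Several details are assumptions made for the example and do not come from the patent: the weight function `1 / (1 + distance)` (one possible continuously decreasing weight), the use of absolute pixel differences, the normalization by total weight, and all function names.

```python
import math


def weight_map(height, width, feature_points):
    """Per-pixel weights that fall off continuously with the distance
    to the nearest high-information point, given as (x, y) pairs."""
    weights = []
    for y in range(height):
        row = []
        for x in range(width):
            d = min(math.hypot(x - fx, y - fy) for fx, fy in feature_points)
            row.append(1.0 / (1.0 + d))   # assumed falloff, not from the patent
        weights.append(row)
    return weights


def weighted_difference(test_face, target_face, weights):
    """Weighted mean of absolute pixel differences between two
    normalized grayscale images of equal size (lists of rows)."""
    total = 0.0
    norm = 0.0
    for t_row, g_row, w_row in zip(test_face, target_face, weights):
        for t, g, w in zip(t_row, g_row, w_row):
            total += w * abs(g - t)
            norm += w
    return total / norm
```

Under this scheme a mismatch near the eyes or mouth raises the score more than the same mismatch near the edge of the face, so a lower score suggests a closer match.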

“In some implementations, selectively recognizing the user can include presenting to the user a predetermined indication according to a user’s profile. The resource can represent an appliance, and the methods can further include capturing the image using an image capture device of the appliance. Selectively recognizing the user can include turning on a display of the appliance, if the display had been off prior to the comparison.

“In some implementations, processing the captured image can include applying an orange-distance filter to the captured image, and segmenting a skin-tone orange portion of the orange-distance filtered image to represent a likely presence of a face in front of the image capture device. Processing the captured image can further include determining changes in area and in location of the skin-tone orange portion of the captured image relative to a previously captured image to represent likely movement of the face in front of the image capture device. Also, processing the captured image further can include detecting a face within the skin-tone orange portion of the orange-distance filtered image when the determined changes are less than predetermined respective variations.
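
The quoted text does not spell out how the orange-distance filter is computed, so the following Python sketch substitutes a simple stand-in: Euclidean distance in RGB space to a reference orange. The reference color, both thresholds, and every function name are hypothetical values chosen for illustration. The sketch segments the skin-tone region, then compares its area and centroid between two frames to guess whether a face is roughly at rest.

```python
import math

SKIN_ORANGE = (255, 160, 100)   # hypothetical reference color, not from the patent
MAX_DISTANCE = 80.0             # hypothetical color-distance threshold


def skin_mask(frame):
    """Mark pixels whose RGB color lies close to the reference orange.
    `frame` is a list of rows of (r, g, b) tuples."""
    return [[math.dist(pixel, SKIN_ORANGE) <= MAX_DISTANCE for pixel in row]
            for row in frame]


def area_and_centroid(mask):
    """Pixel count and (x, y) centroid of the marked region."""
    points = [(x, y) for y, row in enumerate(mask)
              for x, hit in enumerate(row) if hit]
    if not points:
        return 0, None
    area = len(points)
    cx = sum(x for x, _ in points) / area
    cy = sum(y for _, y in points) / area
    return area, (cx, cy)


def face_at_rest(prev_mask, curr_mask, max_area_change=0.1, max_shift=2.0):
    """True if the skin-tone region changed little in area and location
    between two frames (both limits are assumed values)."""
    prev_area, prev_center = area_and_centroid(prev_mask)
    curr_area, curr_center = area_and_centroid(curr_mask)
    if prev_area == 0 or curr_area == 0:
        return False
    area_change = abs(curr_area - prev_area) / prev_area
    shift = math.dist(prev_center, curr_center)
    return area_change < max_area_change and shift < max_shift
```

Only when `face_at_rest` returns True would a system along these lines go on to the more expensive step of looking for eyes and a mouth inside the segmented region.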

“According to another aspect, the described subject matter can also be implemented in an appliance including a data storage device configured to store profiles of users associated with the appliance. The appliance further includes an image capture device configured to acquire color frames. Further, the appliance includes one or more data processors configured to apply an orange-distance filter to a frame acquired by the image capture device.

“The one or more data processors are further configured to determine respective changes in area and location of a skin-tone orange portion of the acquired frame relative to a previously acquired frame, and to infer, based on the determined changes, a presence of a face substantially at rest when the frame was acquired. Further, the one or more data processors are configured to detect a face corresponding to the skin-tone orange portion of the acquired frame in response to the inference, the detection including finding eyes and a mouth within the skin-tone orange portion.

“Furthermore, the one or more data processors are configured to normalize the detected face based on locations of eyes and a mouth of a face in a reference image. In addition, the one or more data processors are configured to analyze weighted differences between normalized target faces and the normalized detected face. The analysis includes weighting portions of a face based on information content corresponding to the portions. The target faces are associated with respective users of the appliance.

“Additionally, the one or more data processors are configured to match the face detected in the acquired frame with one of the target faces based on a result of the analysis, and to acknowledge the match of the detected face in accordance with a profile stored on the data storage device and associated with the matched user of the appliance.
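
One way to picture the matching step is the short Python sketch below. It assumes that each stored target face has already been given a difference score where lower means more similar, and that a match is accepted only under some threshold. The function name, the score dictionary, and the threshold are all hypothetical and are not taken from the patent.

```python
def match_face(scores, threshold):
    """Return the user whose target face has the lowest difference
    score, or None if even the best score exceeds the threshold.

    `scores` maps a user name to that user's difference score.
    """
    if not scores:
        return None
    best_user = min(scores, key=scores.get)
    return best_user if scores[best_user] <= threshold else None
```

A "low threshold" system in the patent's sense would tolerate some false positives, which in this sketch corresponds to choosing a fairly permissive `threshold`.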

“These and other implementations can include one or more of the following features. The data storage device is further configured to store rules for analyzing the weighted differences including weighting rules and scoring rules, and rules for matching the detected face against target faces.

“Particular implementations of the subject matter described in this specification can be configured to realize one or more of the following potential advantages. The techniques and systems disclosed in this specification can reduce the impact of lighting and emphasize skin variance. By acquiring images with the appliance’s own image capture device, the approximate location and orientation of face features can be pre-assumed and can avoid the overhead of other face recognition systems.

“The disclosed methods can ignore face biometrics, and rather use feature locations to normalize an image of a test face. Further, the face recognition techniques are based on a simple, weighted difference map, rather than traditional (and computationally expensive) correlation matching.”

— Dennis Sellers