Apple has been granted a patent (number 11,119,573) that takes face recognition to the next level. It involves Apple devices reacting to pupil modulation — that is, changes in the size of your pupils.

About the patent

In the patent, dubbed “pupil modulation as a cognitive control signal,” Apple notes that electronic devices differ in how well they support viewing and interacting with electronic content. Many kinds of input mechanisms have been built into user devices to provide functionality and user interaction (e.g., keyboards, mice, touchscreens, buttons, microphones for voice commands, optical sensors, etc.).

However, Apple notes that many devices can have limited interaction and control capabilities due to device size constraints, display size constraints, operational constraints, etc. For example, small or thin user devices can have a limited number of physical buttons for receiving user input.

Similarly, small user devices can have touchscreens with limited space for providing virtual buttons or other virtual user interface elements. In addition, some devices can have buttons or other interactive elements that are unnatural, cumbersome, or uncomfortable to use in certain positions or in certain operating conditions.

For example, it may be cumbersome to interact with a device using both hands (e.g., holding a device in one hand while engaging interface elements with the other). In another example, it may be difficult to press small buttons or engage touchscreen functions while a user’s hands are otherwise occupied or unavailable (e.g., when wearing gloves, carrying groceries, holding a child’s hand, driving, etc.). Apple thinks one answer may be a device that responds to changes in the size of a user’s pupils.

Summary of the patent

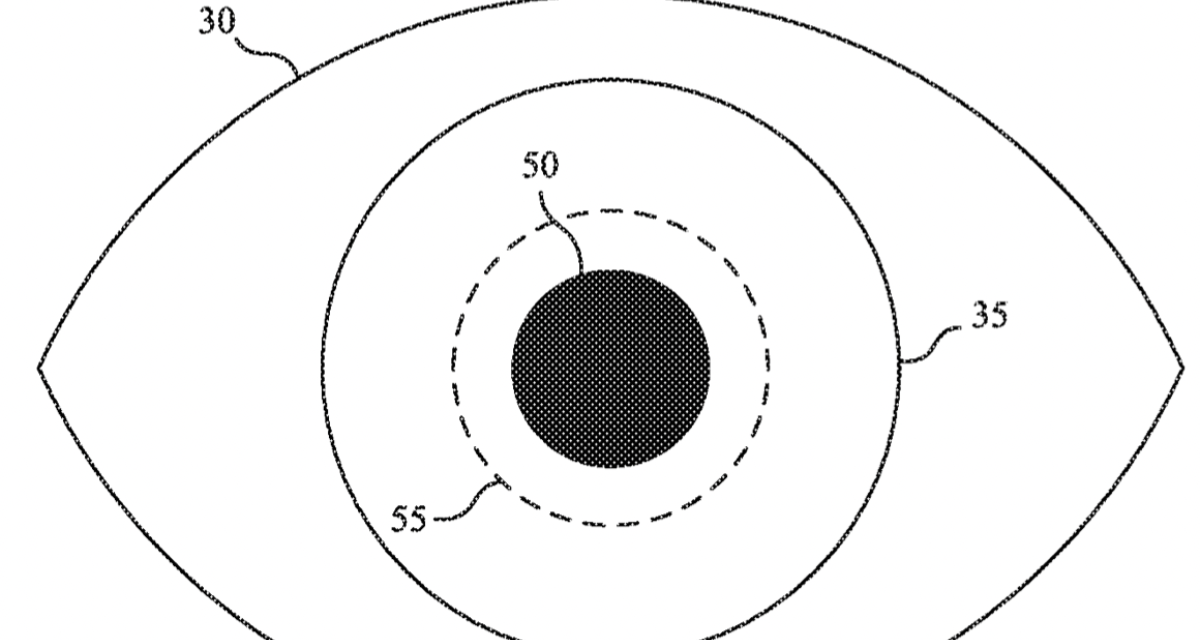

Here’s Apple’s abstract of the patent: “One exemplary implementation provides an improved user experience on a device by using physiological data to initiate a user interaction for the user experience based on an identified interest or intention of a user. For example, a sensor may obtain physiological data (e.g., pupil diameter) of a user during a user experience in which content is displayed on a display. The physiological data varies over time during the user experience and a pattern is detected. The detected pattern is used to identify an interest of the user in the content or an intention of the user regarding the content. The user interaction is then initiated based on the identified interest or the identified intention.”
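To make the flow in the abstract concrete, here is a minimal Python sketch of the general idea: read pupil diameters over time, look for a pattern, and trigger an interaction when one is found. It is not Apple's method. The patent abstract does not say how the pattern is detected, so the rule used here (a sustained dilation above a resting baseline) is an assumption, as are the function names `detect_dilation` and `respond_to_pupil_data` and every threshold value.

```python
from statistics import mean


def detect_dilation(samples_mm, baseline_window=5, threshold_ratio=1.15, min_run=3):
    """Return the index at which a sustained dilation is confirmed, or None.

    All parameters are hypothetical: the baseline is the mean of the first
    `baseline_window` readings, and a "pattern" is `min_run` consecutive
    readings at least `threshold_ratio` times that baseline.
    """
    if len(samples_mm) <= baseline_window:
        return None  # not enough data to form a baseline and test against it
    baseline = mean(samples_mm[:baseline_window])
    run = 0
    for i in range(baseline_window, len(samples_mm)):
        if samples_mm[i] >= baseline * threshold_ratio:
            run += 1
            if run >= min_run:
                return i
        else:
            run = 0  # the dilation was not sustained, start over
    return None


def respond_to_pupil_data(samples_mm, on_interest):
    """Call `on_interest(index)` if the assumed pattern is found."""
    index = detect_dilation(samples_mm)
    if index is not None:
        on_interest(index)
        return True
    return False
```

In this sketch, `on_interest` stands in for whatever the device would do in response, such as selecting the item the user is looking at. A real system would also have to account for changes in ambient light, which affect pupil size independently of interest.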

Figure 2 illustrates a device displaying content and obtaining physiological data from a user.

Article provided with permission from AppleWorld.Today