Apple has been granted a patent (number 11496723) for “automatically capturing a moment.” It involves being able to capture pics or videos with your iPhone’s camera — even if it means doing so virtually.

About the patent

Today’s electronic devices provide users with many ways to capture the world around them. Often, personal electronic devices such as the iPhone come with one or more cameras that allow users to capture innumerable photos of their loved ones and important moments. Photos or videos allow a user to revisit a special moment.

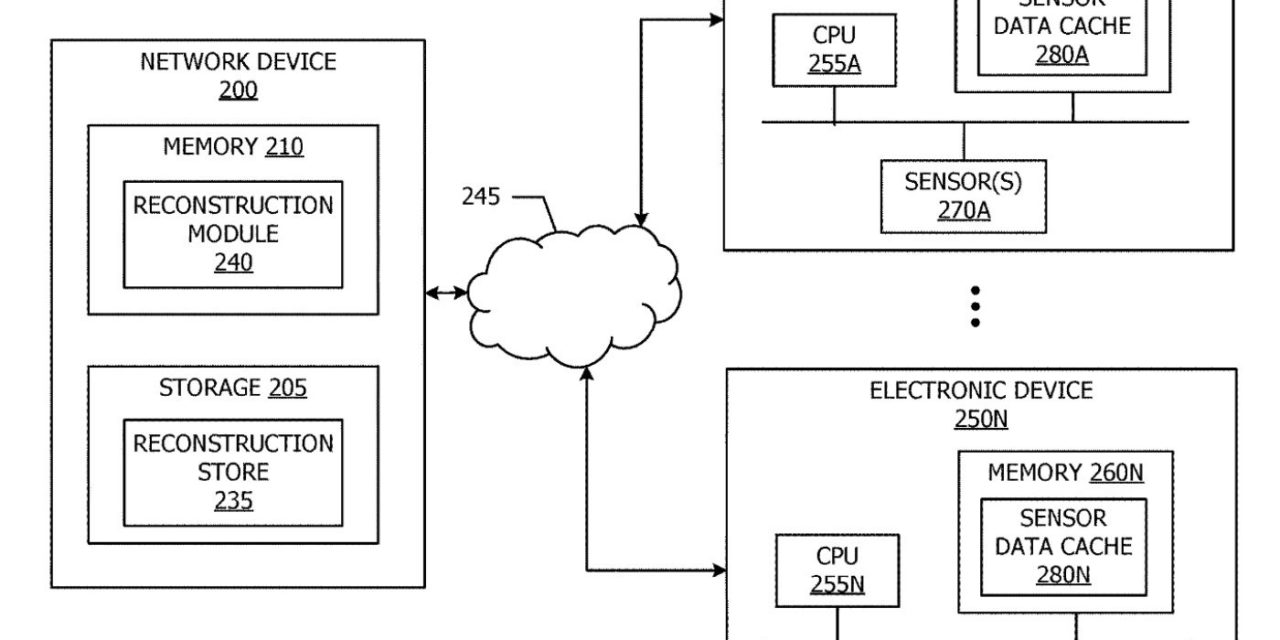

Apple notes, however, that by the time a user opens a camera application and points a capture device at a scene, the important moment has often passed. The tech giant’s solution involves systems, methods, and computer-readable media for providing a virtual representation of a scene.

In general, the patent discloses techniques for detecting a triggering event to obtain sensor data from which the virtual representation may be recreated. The virtual representation of the scene may include at least a partial 3D representation of the scene, a 360-degree view of a scene, or the like. In response to detecting the triggering event, a system may obtain sensor data from two or more capture devices.

The sensor data may be obtained from cached sensor data for each of the capture devices, and may be obtained for a particular time or a particular time window (e.g., 5 relevant seconds). The time or time window may be a predetermined amount of time before the indication is detected, the time at which the indication is detected, or a predetermined time after the indication is detected.
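To make the caching idea concrete, here is a minimal Python sketch of how a per-device cache and a trigger-relative time window could fit together. It is an illustration only, not Apple's implementation: the names `Sample`, `SensorCache`, and `capture_moment`, the device labels, and the string payloads standing in for frames are all hypothetical and do not come from the patent.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: float  # seconds on a clock shared by all devices (assumption)
    data: str         # placeholder for a real frame or depth map


class SensorCache:
    """Keeps only the most recent samples from one capture device."""

    def __init__(self, max_samples):
        # A bounded deque silently discards the oldest sample when full.
        self._samples = deque(maxlen=max_samples)

    def add(self, sample):
        self._samples.append(sample)

    def window(self, start, end):
        """Return cached samples with start <= timestamp <= end."""
        return [s for s in self._samples if start <= s.timestamp <= end]


def capture_moment(caches, trigger_time, before=5.0, after=0.0):
    """On a trigger, pull the same time window from every device's cache."""
    start, end = trigger_time - before, trigger_time + after
    return {name: cache.window(start, end) for name, cache in caches.items()}


# Two hypothetical capture devices, each sampled once per second.
caches = {"wide": SensorCache(100), "depth": SensorCache(100)}
for t in range(20):
    caches["wide"].add(Sample(float(t), f"wide-{t}"))
    caches["depth"].add(Sample(float(t), f"depth-{t}"))

# A trigger at t = 12 s retrieves the preceding 5 seconds from both caches.
moment = capture_moment(caches, trigger_time=12.0)
```

Changing `before` and `after` covers the three cases described above: a window before the trigger, a single instant at the trigger (both set to zero), or a window extending after it. Combining the returned samples into a 3D or 360-degree representation is a separate step that this sketch does not attempt.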

What’s more, the time or time window for which the sensor data is obtained may vary dynamically, for example, based on context or user input. The sensor data obtained may be used to generate the virtual representation of the scene, and the virtual representation may be stored, either locally or on network storage.

Summary of the patent

Here’s Apple’s abstract of the patent: “Generating a representation of a scene includes detecting an indication to capture sensor data to generate a virtual representation of a scene in a physical environment at a first time, in response to the indication obtaining first sensor data from a first capture device at the first time, obtaining second sensor data from a second capture device at the first time, and combining the first sensor data and the second sensor data to generate the virtual representation of the scene.”

Article provided with permission from AppleWorld.Today