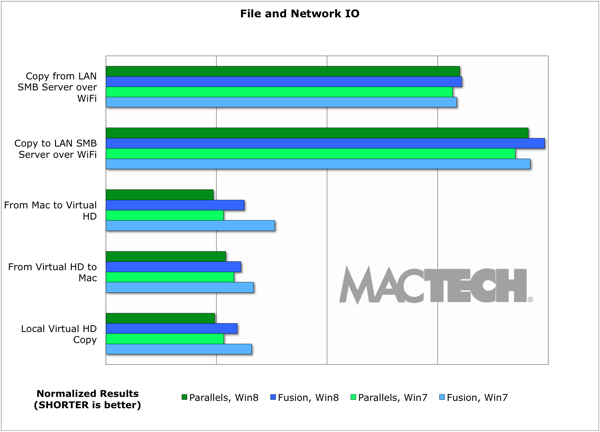

File and Network IO Tests

One of the common problems with file and network IO tests is caching. Benchmarkers often think they are avoiding caching when in fact they aren’t. In file and network IO tests, caching can occur at two levels: in the host OS and in the guest OS. Furthermore, with groups of small files, we’ve sometimes seen unexplained performance changes that looked almost like caching but clearly couldn’t have been, because they persisted even after restarts of both the host and guest OS.

Worse yet, SSDs and virtualization products tend to apply sophisticated optimizations, such as data compression, to data containing blocks of zeroes. Since the major modern file formats contain no zero blocks, it’s best to test with files that resemble real-world data (i.e., no zero blocks). Formats that never contain blocks of zeroes include MP3, all the main graphics formats, zip/rar, HTML, DMG, and PDF.

In our tests, we used a set of four different 1 GB files filled with random, non-zero data to give the most representative results and to avoid caching. More specifically:

Sets of one or four 1 GB files with randomized data. To generate a file of exactly 1 GB (1,073,741,824 bytes), we used the dd command:

sudo dd if=/dev/urandom of=file1G-1 bs=$((1024*1024)) count=1024
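As a rough sketch, the same dd invocation can be wrapped in a loop to produce a whole set of files in one go. The helper name make_random_files and its arguments are hypothetical and not taken from our test scripts, and sudo is omitted on the assumption that the target directory is writable by the current user.

```shell
#!/bin/sh
# Hypothetical helper (not from the original tests): create COUNT files named
# PREFIX-1 .. PREFIX-COUNT, each SIZE_MIB mebibytes of data from /dev/urandom.
make_random_files() {
    prefix=$1     # file name prefix, e.g. file1G
    count=$2      # number of files in the set, e.g. 4
    size_mib=$3   # size of each file in MiB, e.g. 1024 for 1 GB
    i=1
    while [ "$i" -le "$count" ]; do
        # Same approach as the single-file command: 1 MiB blocks of random data.
        dd if=/dev/urandom of="${prefix}-${i}" bs=1048576 count="$size_mib" \
            2>/dev/null || return 1
        i=$((i + 1))
    done
}
```

Calling `make_random_files file1G 4 1024` would then write file1G-1 through file1G-4, each 1 GB in size.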

The idea behind these sets of four 1 GB files is to move enough data that we can both see real throughput and avoid caching at all levels.
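To illustrate what measuring throughput over such a set might look like, here is a minimal sketch that times a copy and reports the rate in MiB/s. The helper time_copy is hypothetical and is not the tool we used. It relies on whole-second timestamps from date, which is only reasonable when several gigabytes are moved, and it does nothing on its own to flush caches.

```shell
#!/bin/sh
# Hypothetical helper (not from the original tests): copy the given files into
# DEST and print the approximate throughput in whole MiB per second.
time_copy() {
    dest=$1
    shift
    total=0
    for f in "$@"; do
        # wc -c may pad its output with spaces on some systems; strip them.
        size=$(wc -c < "$f" | tr -d ' ')
        total=$((total + size))
    done
    start=$(date +%s)
    cp "$@" "$dest"/ || return 1
    end=$(date +%s)
    elapsed=$((end - start))
    # The clock has one-second resolution; avoid dividing by zero on fast copies.
    [ "$elapsed" -gt 0 ] || elapsed=1
    echo "$((total / 1048576 / elapsed))"
}
```

For example, `time_copy /Volumes/share file1G-1 file1G-2 file1G-3 file1G-4` would copy the four-file set to a destination directory (the path here is only a placeholder) and print a single number.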

In most cases, Parallels was slightly to moderately faster than Fusion. The biggest exceptions were file copies over Wi-Fi to and from the SMB server, where the two performed similarly, and local file copies with OS X as a guest, where Fusion was faster.